History of Rendering: Rasterization, Ray Tracing, Path Tracing

Early Techniques

The progression of rendering technologies from Rasterization to Ray Tracing to Path Tracing has been an exciting journey. Rendering techniques are constantly evolving to turn 3D models into images that match our visual impression of what we would like to see.

Our first representations were crude geometric interpretations of their worldly counterparts. Limitations of our computational ability, display representations and data storage put strong constraints on our ability to synthesize the visions in our mind’s eye. Starting from abstract spatial arrangements of shapes we incrementally began to explore more expressive algorithms and techniques to visualize the world around us.

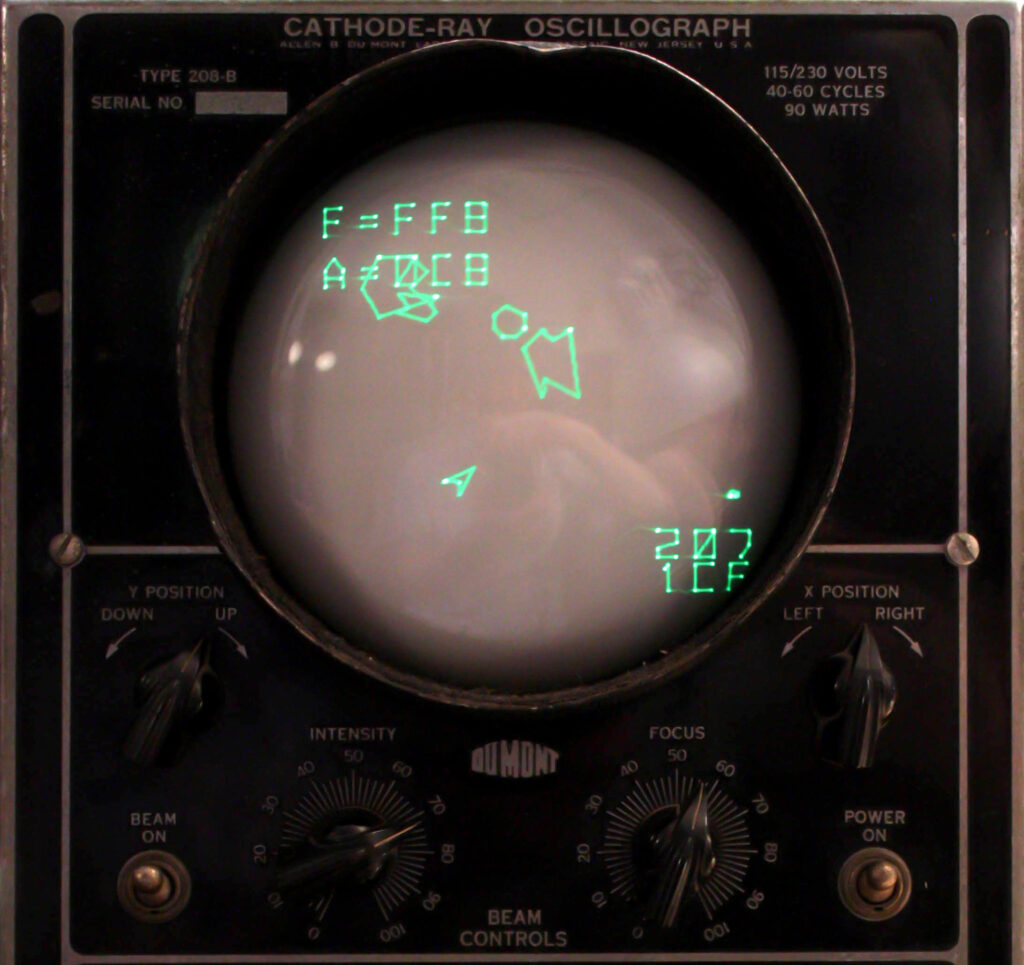

Early CAD systems on calligraphic displays etched vectors of light onto glowing screens, allowing the rotation and manipulation of rough 3D forms. With the advent of raster frame buffers, we gained the ability to represent colors, and shapes became forms we could display as images. With higher resolution and greater fidelity, it became possible to recreate the lights and shades of our world in a view akin to our artistic intent and approaching a more naturalistic form.

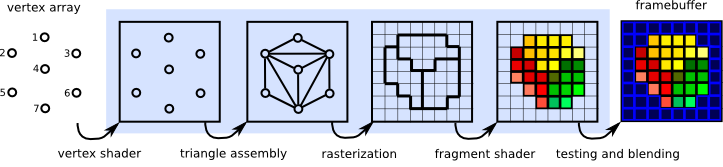

To enhance interactivity and draw ever more complicated geometries, hardware evolved to store 3D forms and to blend those shapes in depth, rendering final pixel values into the raster frame buffer. This process of Rasterization and scanline rendering became the dominant pipeline for creating synthetic imagery with hardware and in computer graphics applications.

Resolutions increased, and textures, lighting and more complex shading models were introduced. Transparency, reflections and real time interaction became the norm for hardware rasterized rendering with programmable GPUs. Even so, our algorithmic advancement outpaced the abilities of our interactive hardware. With a strong push towards photorealism, physical accuracy, and more carefully simulated energy transfer and material properties, much of the cutting edge rendering research was computationally beyond the abilities of the hardware of the day, or had to take advantage of very expensive workstations, distributed computing and supercomputers to complete imaging tasks.

Photorealism

To achieve photorealistic results, more physically accurate techniques were explored. Early explorations in radiative transfer and finite element techniques started to broach the physics of light transport and energy exchange. One of the most important developments was the introduction of geometric optical principles for simulating light exchange and material interactions. This was the advent of Ray Tracing. The succinct mathematics of the physical properties of light was a perfect fit for CAD engineers wanting to program a model that was both accurate and concise.

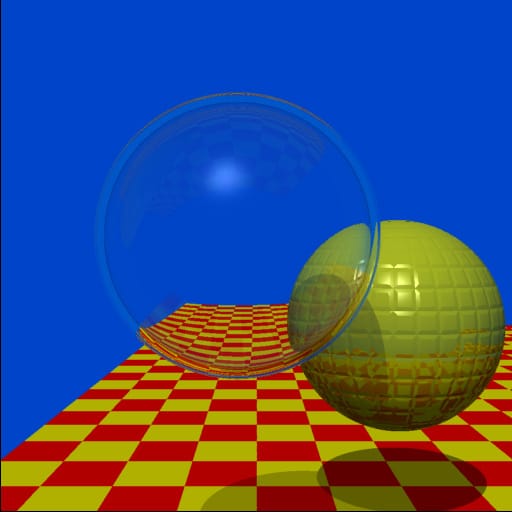

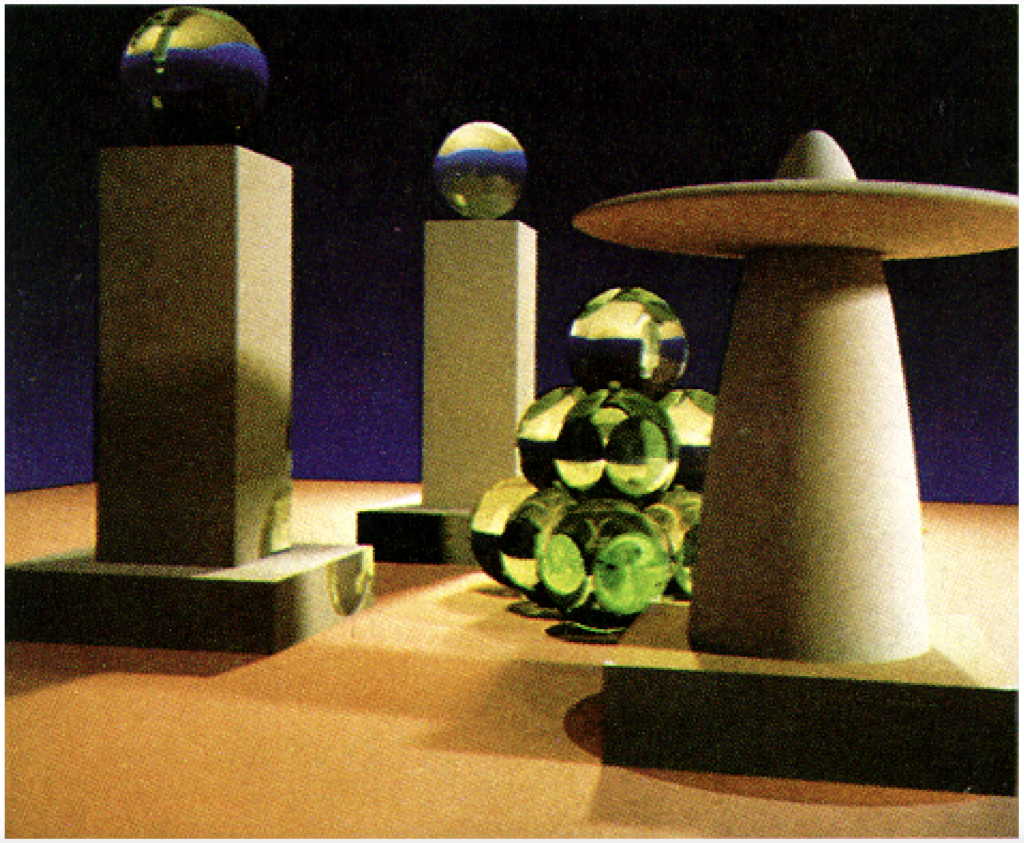

Ray tracing led to more than 20 years of rendering exploration. Having optical elements and synthetic cameras brought the creation of imagery ever closer to our real imaging techniques. Film cameras gave way to digital sensors, and animators started making movies with 3D characters. Ray tracing precisely calculated crisp chrome reflections, sharp shadows and lovely refractive solid glass. Things that seemed unapproachable with raster z-buffers and scanline rendering became a few lines of recursive ray bouncing. It took a lot of computation, but folks would gladly tee up an overnight render to return to those lovely, perspective perfect, gleaming pixels.

Year over year, SIGGRAPH bubbled with new ray tracing tech: depth of field, motion blur, soft shadows, displacement maps, caustics, volumetrics, liquids, smoke, fire and flames. As ray tracers were extended, the evolution of lighting models ushered in the next generation of rendering. To recreate many of the properties of light an even more physically based approach was needed. Ray tracing captured the geometrically optical nature of materials extremely well but it became evident that the underlying shading models needed to be extended to cover more of the subtle interreflections of light and how that affected colors. In addition to extending the way light was handled, the way materials interacted with light needed to be more closely scrutinized.

Programmable shaders opened these explorations to artists as well as researchers. A new dawn of Physically Based Rendering was ushered in, where materials conserved energy, the spectral nature of light was respected, quantities were measured and rendered in SI radiometric units, and both the gamma response of the display device and the perceptual responses of the human observer were taken into account.

All this physical realism came with a high price tag: big budget productions in media and entertainment were spending vast resources on hardware, storage and computation as the bar of photorealism was raised ever higher. Budgets and artist time expanded for larger and larger projects. Rasterized pipelines started to fall by the wayside as the increased realism and quality of ray traced projects became the norm. However, the rendering power of a standard artist’s workstation was not increasing at the exponential rate of project complexity.

Physically Based Rendering

Content producers optimized pipelines, offshored tasks, automated processes and invested in IT infrastructure to improve their output and pacing. GPU performance was increasing, helping content creators immensely, but using GPUs for ray tracing was still in its infancy and could not be used for final production visuals.

The pinnacle of ray traced quality took a big step forward as new algorithms for distributing light rays arrived. The technique, called Path Tracing, was not new: it had been developed in the mid ’80s but was computationally cost prohibitive. What changed was that we now had enough compute power to calculate all the Physically Based Rendering techniques, and probabilistic models were applied to deliver rays optimally to match the distribution and shape of light as reflected from these accurate material models.

GPUs are also starting to speed up some of the ray tracing calculations and more of the ancillary rendering work, and their efficiency is growing at a very competitive rate. Allowing a full Path Tracing pipeline to converge produces images of stunning quality, where the fidelity and subtlety of light and shadow are markedly enhanced over the results of standard Ray Tracing. Given enough samples, the rigorous calculations drive down the noise, error and approximations in a render solution. Large production scenes are still too vast to be produced cost effectively on a single workstation, and Path Tracing on GPUs is still in its infancy.

The complexity of environments and characters in real time entertainment has increased dramatically, but reaching interactive frame rates at big screen resolutions using Path Tracing still requires immense optimization, expensive fine tuning and long development times, and the resulting quality doesn’t quite live up to customers’ expectations. Customers are nonetheless quite willing to pay dearly for the results and will wait in line to buy them. This provides a healthy ecosystem to stimulate competitive development of techniques and solutions to push rendering forward ever more quickly.

Rendering Techniques Appendix

Rasterization

Rasterization is the part of a rendering pipeline where geometric objects are translated into color pixel values for storage and display. The process of using graphics hardware to transform, shade and draw 3D geometry is often referred to as Rasterization rendering.
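
As a rough illustration of that translation from geometry to pixels, here is a minimal software sketch in Python. It assumes triangles that are already projected to screen space, tests each pixel center with edge functions, and resolves overlap with a depth buffer. The function names and the triangle layout are hypothetical choices for this example; real graphics hardware does far more (clipping, perspective correct interpolation, shading).

```python
import math

def edge(a, b, p):
    """Twice the signed area of triangle (a, b, p), using only x and y."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def rasterize(triangles, width, height):
    """Fill a color buffer from screen-space triangles.

    Each triangle is (v0, v1, v2, rgb) with vertices (x, y, z) in pixel
    units; a smaller z is closer to the viewer (an assumption of this sketch).
    """
    color = [[None] * width for _ in range(height)]
    depth = [[math.inf] * width for _ in range(height)]
    for v0, v1, v2, rgb in triangles:
        area = edge(v0, v1, v2)
        if area == 0:
            continue  # degenerate triangle covers no pixels
        # Only visit pixels inside the triangle's bounding box, clipped to the screen.
        x_min = max(math.floor(min(v0[0], v1[0], v2[0])), 0)
        x_max = min(math.ceil(max(v0[0], v1[0], v2[0])), width)
        y_min = max(math.floor(min(v0[1], v1[1], v2[1])), 0)
        y_max = min(math.ceil(max(v0[1], v1[1], v2[1])), height)
        for y in range(y_min, y_max):
            for x in range(x_min, x_max):
                p = (x + 0.5, y + 0.5)  # sample at the pixel center
                # Barycentric weights; dividing by area makes winding order irrelevant.
                w0 = edge(v1, v2, p) / area
                w1 = edge(v2, v0, p) / area
                w2 = edge(v0, v1, p) / area
                if w0 < 0 or w1 < 0 or w2 < 0:
                    continue  # pixel center lies outside the triangle
                z = w0 * v0[2] + w1 * v1[2] + w2 * v2[2]
                if z < depth[y][x]:  # keep the nearest surface
                    depth[y][x] = z
                    color[y][x] = rgb
    return color
```

Because visibility is decided per pixel by the depth comparison, the triangles can be submitted in any order and the nearest surface still wins.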

In the late 1970s expensive raster framebuffers began to displace vector calligraphic displays for graphical output. The advent of hardware accelerated architectures, such as Jim Clark’s Geometry Engine, gave rise to the graphics workstations of the 1980s (SGI, Apollo, Sun, etc.). The PC revolution commoditized 3D interaction with color displays and the introduction and evolution of GPUs. Raster graphics engines became the norm in PCs, laptops, game consoles and handheld devices.

A variety of APIs became available for developers to create 3D applications. These tools closely matched the abilities of the underlying hardware, and the raster graphics pipeline became the standard method to render and interact with 3D content. The graphics APIs competed to provide faster and more attractive output while remaining price competitive in their respective markets (scientific workstations, CAD, gaming, film and visual effects). The variety of 3D applications and markets expanded as more powerful hardware became available. The stable and diverse techniques of rasterized graphics met the needs of many markets and still remain the most cost effective means to deliver interactive solutions.

Ray Tracing

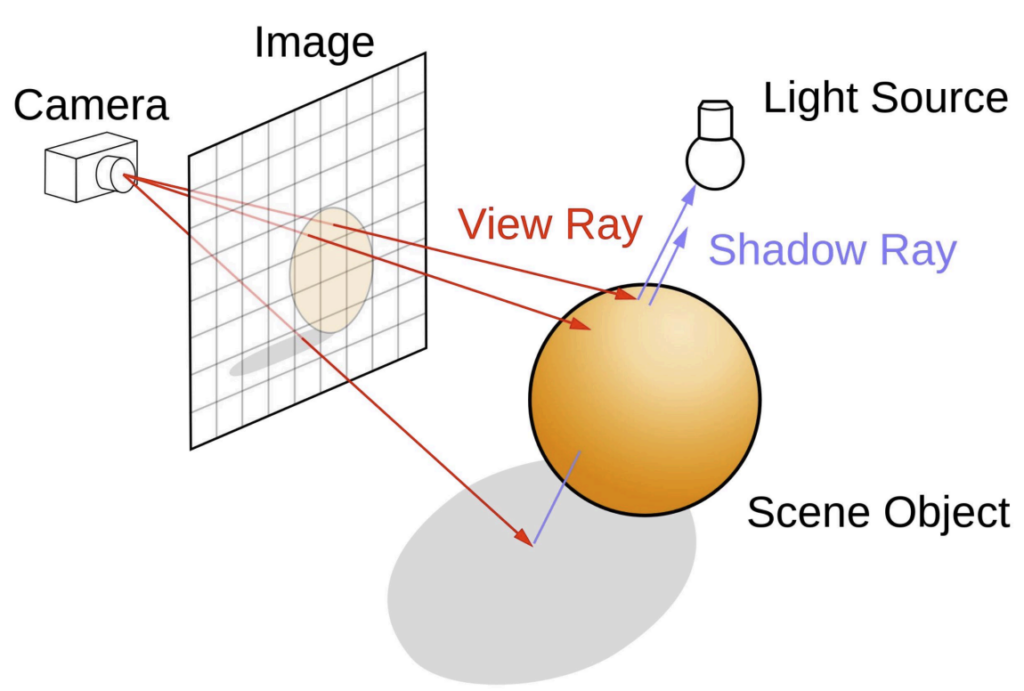

Ray tracing is a rendering technique based on geometric optics. It generates images by casting rays of light through a camera into a 3D scene and bouncing those rays off objects in the scene to determine the colors that reach the camera.
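
The cast-and-bounce idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production renderer: the scene is a list of spheres, there is a single directional light, and `BACKGROUND`, `LIGHT_DIR` and the sphere tuple layout are hypothetical names chosen for this example. Shadows and refraction are left out.

```python
import math

BACKGROUND = (0.2, 0.3, 0.5)  # color returned when a ray escapes the scene
LIGHT_DIR = (0.0, 0.0, 1.0)   # unit vector pointing toward the light

def add(a, b): return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def scale(a, s): return (a[0] * s, a[1] * s, a[2] * s)
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def normalize(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))

def hit_sphere(origin, direction, center, radius):
    """Nearest positive distance along a unit-length ray to the sphere, or None."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # the ray misses the sphere
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-4:  # small offset avoids re-hitting the starting surface
            return t
    return None

def reflect(d, n):
    """Law of reflection: mirror direction d about the unit normal n."""
    return sub(d, scale(n, 2.0 * dot(d, n)))

def trace(origin, direction, spheres, depth=0, max_depth=3):
    """Color seen along a ray; spheres are (center, radius, color, reflectivity)."""
    nearest = None
    for sphere in spheres:
        t = hit_sphere(origin, direction, sphere[0], sphere[1])
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, sphere)
    if nearest is None:
        return BACKGROUND
    t, (center, radius, color, reflectivity) = nearest
    point = add(origin, scale(direction, t))
    normal = normalize(sub(point, center))
    # Lambert shading from the single directional light.
    local = scale(color, max(dot(normal, LIGHT_DIR), 0.0))
    if reflectivity > 0 and depth < max_depth:
        # Recursive bounce: follow the mirrored ray and blend in what it sees.
        bounced = trace(point, reflect(direction, normal), spheres, depth + 1, max_depth)
        return add(scale(local, 1.0 - reflectivity), scale(bounced, reflectivity))
    return local
```

Calling `trace` once per pixel, with a ray from the camera through that pixel, produces an image. The `max_depth` cap is a design choice of this sketch to stop rays bouncing forever between mirrors.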

Using rays to simulate optical paths has been studied in many fields (optics, astronomy, navigation). Techniques from lens design, microscopy, and physical optics provide a variety of solutions for tracing rays through transparent objects, scattering light from reflective materials and determining a myriad of scene properties based on the intersection of rays with geometric models. This powerful paradigm for image synthesis opened up the field of computer graphics to pursue the physical interaction of light with our environment.

Ray tracing has been used by artists since the Renaissance to construct accurate perspective and to understand how 3D scenes are projected onto a 2D image. Descartes traced rays through water droplets to explain the geometry of the rainbow, Newton used refraction to explain its colors, and Galileo relied on the refraction of rays through lenses to build his telescopes.

The mathematics of perspective transformations is a core component of any introductory computer graphics course, and the thin lens equation and the law of reflection are standard items in first-semester physics. We nearly take these foundational concepts for granted, but early computer graphics researchers were applying them for the first time in their blossoming field, first to Computer Aided Design and then to the first physically based rendering algorithms.

Ray tracing ushered in a deluge of computer graphics research. Introducing much more physically accurate illumination models and material representations led to ever more photorealistic results. This virtuous cycle has been the bedrock of progress in computer graphics research and continues to drive the leading techniques and algorithms in rendering.

Path Tracing

Path tracing is an advanced form of ray tracing that more accurately accounts for the diffuse interreflections of light and carefully shapes the distributions of light reflected from complex materials. Path tracing uses iterative probabilistic techniques to converge upon a noise free solution with very accurate sampling. This alleviates many image synthesis pitfalls, including geometric jaggies, high frequency texture ringing, moiré patterns and other aliasing issues. The algorithms are computationally expensive, but the subtlety of the results and the clean dynamic range that can be expressed are a big step forward towards truly physically accurate rendering.
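
One small piece of this, how random sampling converges and removes jaggies, can be sketched in Python. The example below is only an illustration of the sampling idea, not a path tracer: it estimates how much of a single unit pixel is covered by a shape whose edge is the hypothetical line y = 0.3x + 0.2. A single sample at the pixel center can only answer "covered" or "not covered", which is where jaggies come from, while averaging many random samples converges on the exact coverage of 0.35.

```python
import random

def inside(x, y):
    """Hypothetical shape: covers the part of the unit pixel below y = 0.3x + 0.2."""
    return y < 0.3 * x + 0.2

def pixel_coverage(num_samples, seed=0):
    """Monte Carlo estimate of the covered fraction of the pixel."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    hits = sum(inside(rng.random(), rng.random()) for _ in range(num_samples))
    return hits / num_samples

# One center sample gives an all-or-nothing answer.
center_only = 1.0 if inside(0.5, 0.5) else 0.0

# More samples move the estimate toward the exact area under the line.
for n in (10, 1000, 100000):
    print(n, pixel_coverage(n))
```

The error of such an estimate shrinks roughly in proportion to one over the square root of the sample count, which is why a converged path traced image needs many samples per pixel.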

The earliest incarnation of path tracing was described in Jim Kajiya’s seminal 1986 paper, “The Rendering Equation”. This SIGGRAPH paper is arguably the root of all rendering papers, in that Kajiya formally defines an integral equation that expresses the entirety of modern rendering theory. His equation combined the possible light paths and contributions that energy could make as it reflected amongst objects, unifying diffuse interreflections, specular reflections and caustics. It removed the notion of treating lights as something different from geometry, asserting that all geometry could reflect and emit energy. This led to the demise of the point source, as all geometry, including light sources, must have a physical area.
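
For reference, the equation is often written today in the hemispherical form below. This is the commonly used modern notation rather than Kajiya's original, which is expressed in terms of transport between pairs of surface points; the symbol names are the conventional ones and are an assumption of this sketch.

```latex
L_o(x, \omega_o) = L_e(x, \omega_o)
  + \int_{\Omega} f_r(x, \omega_i, \omega_o)\, L_i(x, \omega_i)\, (n \cdot \omega_i)\, d\omega_i
```

Here $L_o$ is the radiance leaving point $x$ in direction $\omega_o$, $L_e$ is the radiance the surface emits itself, $f_r$ is the BRDF, $L_i$ is the radiance arriving from direction $\omega_i$, $n$ is the surface normal, and the integral runs over the hemisphere $\Omega$ above the surface. Because $L_i$ at one point is $L_o$ at another, the equation is recursive, and the emission term is what lets any piece of geometry act as a light.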

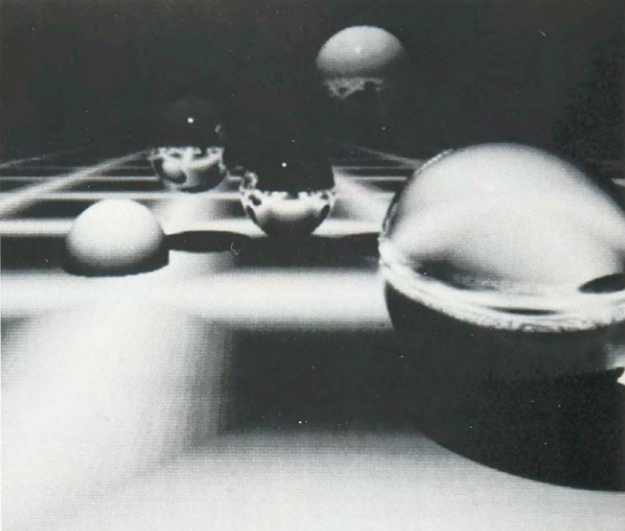

With material attributes explicitly split into diffuse and specular components, the structure was in place to develop advanced BRDFs to describe materials, and the previously used ambient lighting terms could be done away with. To illustrate his unified rendering philosophy, Kajiya implemented a brute force path tracer to solve his integral rendering equation. He was so far ahead of the curve that it took 20 hours to render the 512×512 image in figure #7 at 40 samples per pixel. He introduced the notion of importance sampling and showed how a hierarchical k-d tree could be used to speed the calculations.
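
To give a feel for why importance sampling helps, here is a small Python sketch. It is not Kajiya's implementation: it estimates the light arriving at a surface from a hypothetical sky whose radiance is `1 + cos(theta)`, once with directions chosen uniformly over the hemisphere and once with directions chosen in proportion to the cosine term of the integrand. Both estimators have the same expected value, 5π/3 for this sky, but the cosine-weighted one has much lower variance. All function names are assumptions of this example.

```python
import math
import random

def sky_radiance(cos_theta):
    """Hypothetical sky that is brighter toward the zenith."""
    return 1.0 + cos_theta

def uniform_sample(rng):
    """One estimate using a direction chosen uniformly over the hemisphere."""
    cos_theta = rng.random()       # cos(theta) is uniform for uniform directions
    pdf = 1.0 / (2.0 * math.pi)    # constant density over the hemisphere
    return sky_radiance(cos_theta) * cos_theta / pdf

def cosine_sample(rng):
    """One estimate using a direction chosen with density cos(theta) / pi."""
    cos_theta = math.sqrt(1.0 - rng.random())  # in (0, 1], so pdf is never zero
    pdf = cos_theta / math.pi
    return sky_radiance(cos_theta) * cos_theta / pdf

def mean_and_variance(sampler, num_samples, seed=0):
    """Sample mean and sample variance of an estimator."""
    rng = random.Random(seed)
    values = [sampler(rng) for _ in range(num_samples)]
    mean = sum(values) / num_samples
    variance = sum((v - mean) ** 2 for v in values) / (num_samples - 1)
    return mean, variance

exact = 5.0 * math.pi / 3.0
print("exact   ", exact)
print("uniform ", mean_and_variance(uniform_sample, 20000))
print("cosine  ", mean_and_variance(cosine_sample, 20000))
```

Placing more samples where the integrand is large means each sample carries a similar weight, so fewer samples are needed for the same amount of noise.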

In 1997 Eric Veach’s PhD thesis, “Robust Monte Carlo Methods for Light Transport Simulation”, laid out enough theory and technique for the graphics industry to start moving toward path tracing in practice. Metropolis light transport and bidirectional path tracing gave rendering scientists the tools to build progressive solvers that efficiently sample ray paths until they converge on a clean result. This, along with the compute power brought by the influx of general purpose programmable GPUs, accelerated the calculations.

Today, path tracing continues to evolve and find uses across many industries.